Refaçade: Editing Object with Given Reference Texture

Youze Huang1,* Penghui Ruan2,* Bojia Zi3,* Xianbiao Qi4,† Jianan Wang5 Rong Xiao4

1 University of Electronic Science and Technology of China 2 The Hong Kong Polytechnic University 3 The Chinese University of Hong Kong 4 IntelliFusion Inc. 5 Astribot Inc.

* Equal contribution. † Corresponding author.

Abstract

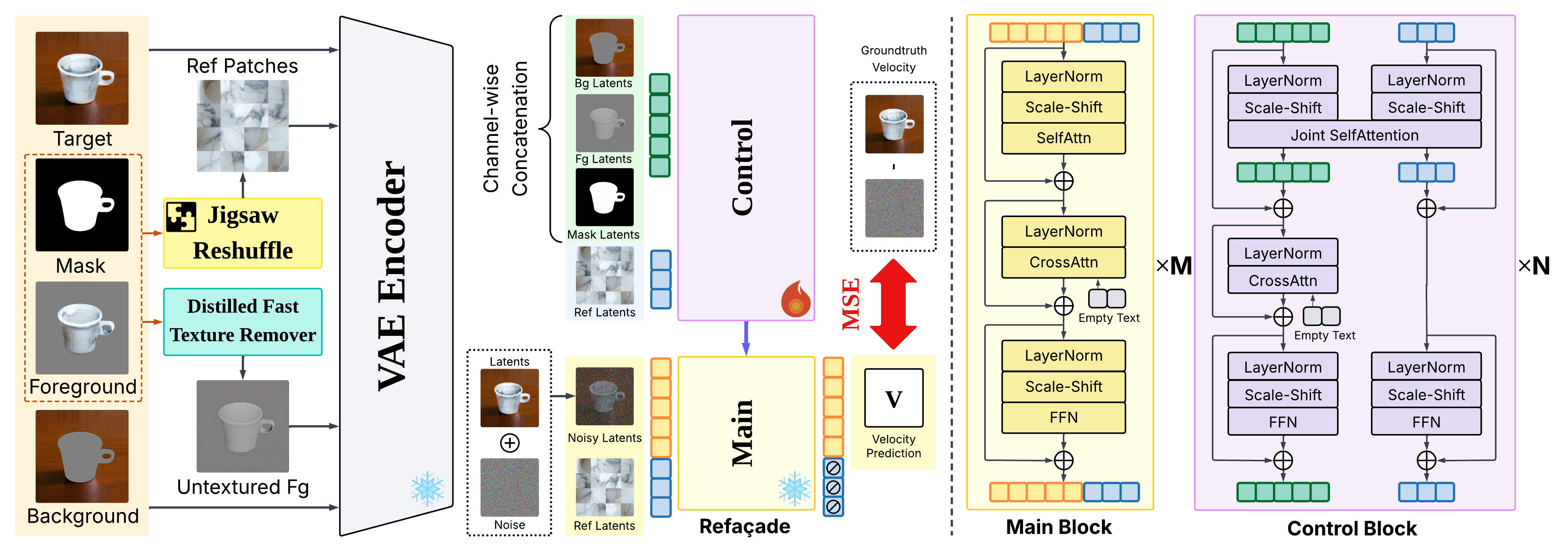

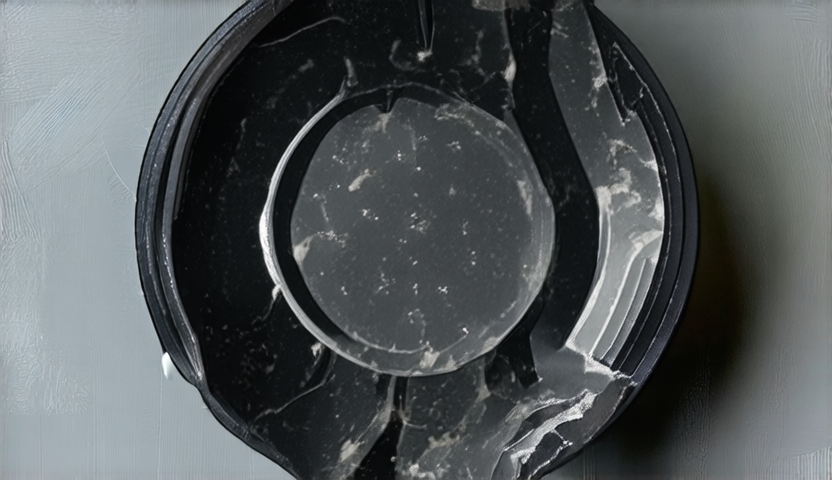

Recent advances in diffusion models have brought remarkable progress in image and video editing, yet some tasks remain underexplored. In this paper, we introduce a new task, Object Retexture, which transfers local textures from a reference object to a target object in images or videos. A straightforward solution is to use ControlNet conditioned on the source structure and the reference texture. However, this approach offers limited controllability for two reasons: conditioning on the raw reference image introduces unwanted structural information, and the method fails to disentangle the source's visual texture from its structure. To address this problem, we propose Refaçade, a method built on two key designs that achieve precise and controllable texture transfer in both images and videos. First, we employ a texture remover trained on paired textured/untextured 3D mesh renderings to remove appearance information while preserving the geometry and motion of source videos. Second, we disrupt the reference's global layout using a jigsaw permutation, encouraging the model to focus on local texture statistics rather than the global layout of the object. Extensive experiments demonstrate superior visual quality, precise editing, and controllability, with Refaçade outperforming strong baselines in both quantitative and human evaluations.
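To make the jigsaw permutation concrete, the following is a minimal NumPy sketch of one way to shuffle a reference image's patches. It is an illustration under stated assumptions, not the authors' implementation: the function name `jigsaw_permute`, the square `grid` parameter, the uniform random shuffle, and the cropping of any remainder pixels are all hypothetical choices that the abstract does not specify.

```python
import numpy as np


def jigsaw_permute(image, grid=4, seed=None):
    """Shuffle non-overlapping patches of an H x W x C image.

    Hypothetical helper: grid size and uniform shuffling are assumptions,
    not details taken from the paper.
    """
    rng = np.random.default_rng(seed)
    h, w, c = image.shape
    ph, pw = h // grid, w // grid  # patch height and width

    # Crop so the image divides evenly into grid x grid patches.
    image = image[: ph * grid, : pw * grid]

    # Split into a flat list of patches: (grid * grid, ph, pw, c).
    patches = (
        image.reshape(grid, ph, grid, pw, c)
        .transpose(0, 2, 1, 3, 4)
        .reshape(grid * grid, ph, pw, c)
    )

    # Randomly reorder patches. Local texture inside each patch is kept,
    # while the global arrangement of patches is destroyed.
    patches = patches[rng.permutation(grid * grid)]

    # Reassemble the shuffled patches into a single image.
    return (
        patches.reshape(grid, grid, ph, pw, c)
        .transpose(0, 2, 1, 3, 4)
        .reshape(ph * grid, pw * grid, c)
    )
```

In this sketch the pixel content within each patch is untouched, so patch-level texture statistics survive while the object's overall shape is no longer recoverable from the reference. A smaller `grid` preserves larger texture patterns at the cost of leaking more layout.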

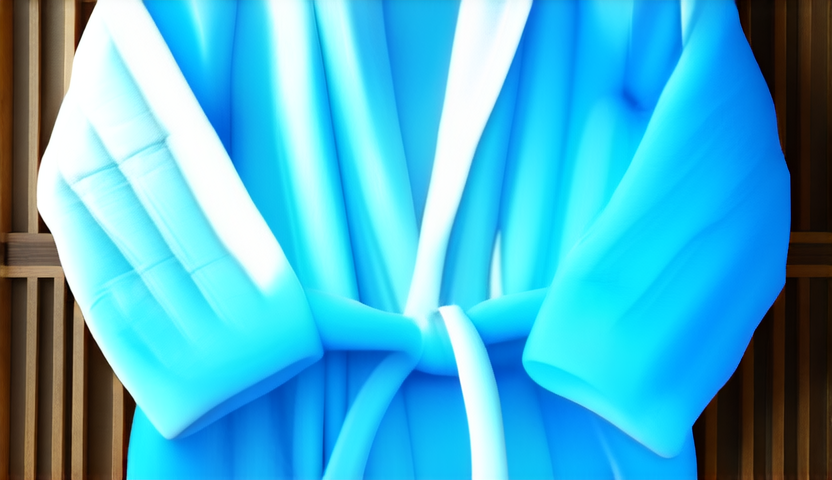

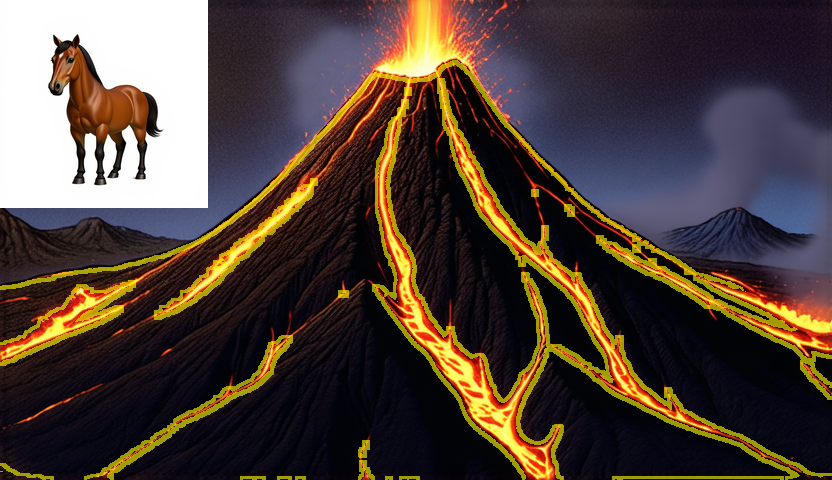

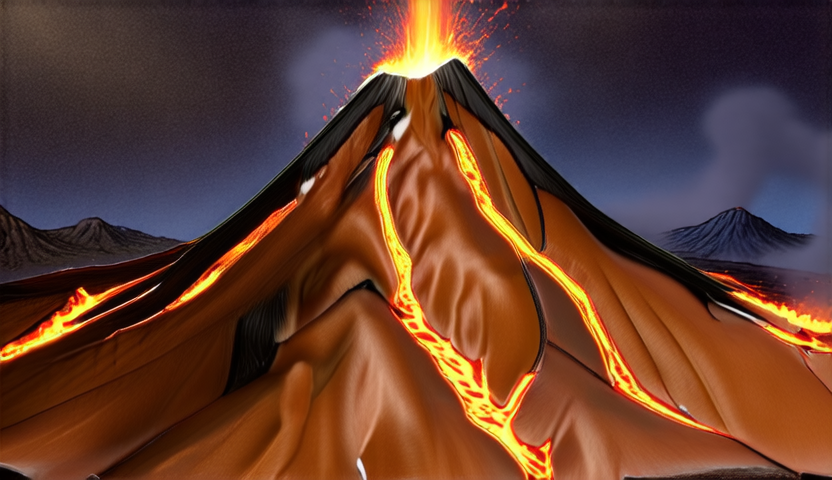

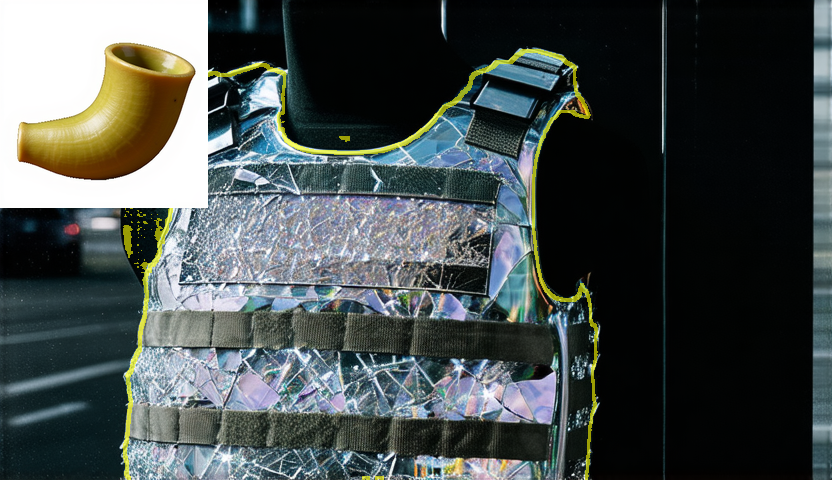

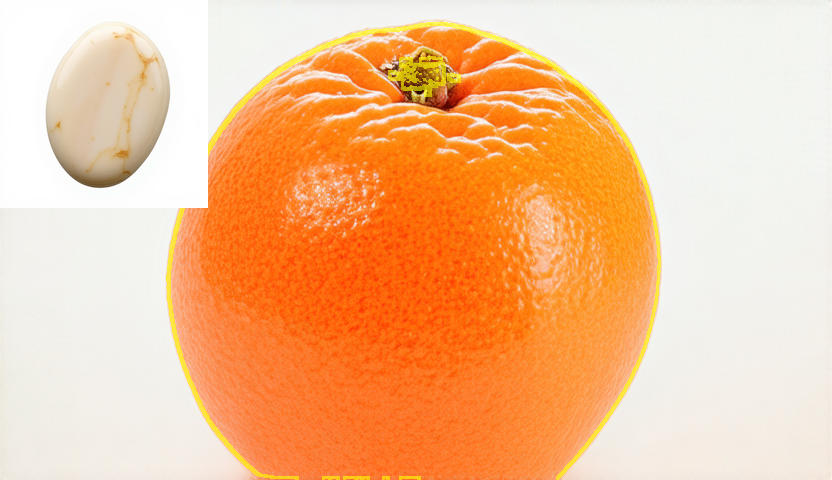

Image Results

Video Results